AI is rapidly getting smarter. Each iteration brings better accuracy, fewer hallucinations, and broader knowledge. Soon, even our most challenging essay questions will be easy for machines to answer.

If students can easily generate sophisticated essays - complete with nuanced arguments and appropriate references - what motivates learning? Why deeply engage with readings when AI can craft compelling analysis? Why grapple with complex ideas when machines can explain academic debates?

We risk creating a generation of graduates who can submit excellent work while understanding very little. Ill-conceived assessments could jeopardise not just students' learning, but also their future careers.

We should act. But how?

Ban Tech?

We could revert to closed-book, in-person assessments written on paper. Alternatively, we might allow students to use computers without internet access.

Sure, that would prevent plagiarism.

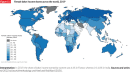

But at what cost? Might it discourage students from exploring artificial intelligence? I want my students to be at the technological frontier, carefully and critically marshalling the latest innovations. This is hugely important for gender equality: existing studies suggest women are 25% less likely to use generative AI.

But I also want to ensure they become deep thinkers. This presents a conundrum. How can we encourage both deep learning and technological mastery?

Two-Stage Assessment: Essays & Interviews

The mode of assessment shapes the entire university experience. It signals what matters, influencing how students engage with their readings, structure their time, and develop key skills. Given this profound impact, we must ensure our assessments directly target deep learning.

For future years, I propose a combined essay and oral assessment. Students have 3 hours to answer 2 out of 6 essay questions, followed by a video interview to explain their work. Not complex. Just a straightforward 10-minute conversation where they explain their argument, discuss their key references, and demonstrate their understanding.

This two-stage assessment process motivates precisely what employers want: the capacity to harness and direct technology while developing the in-depth understanding needed to explain those findings. More fundamentally, it rewards what makes us distinctly human - our ability to connect, communicate, and convince others through personal interaction.

The need to explain their work motivates students to forge crucial social skills. Clear and persuasive communication emerges naturally, as does mastery of complex ideas and quick thinking. Such competencies complement AI by prioritising interpersonal abilities.

For my two core modules, this means interviewing 170 students. Each conversation takes 10 minutes, with additional time needed for scheduling and administration. That is about four working days.
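As a rough check of that estimate, here is the interview time alone, assuming an 8-hour working day (the post does not state one):

```latex
170 \times 10\ \text{min} = 1700\ \text{min} \approx 28.3\ \text{h},
\qquad
\frac{28.3\ \text{h}}{8\ \text{h/day}} \approx 3.5\ \text{working days}
```

Under that assumption, roughly half a day of the four remains for scheduling and administration.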

Fostering Deep Learning in an AI Age

As AI races forward, I want to be proactive, ensuring my assessments motivate students to become tech-savvy deep thinkers and creative learners.

Your feedback and critique would be superbly useful.

Collectively, how might we refine this approach?

"If students can easily generate sophisticated essays - complete with nuanced arguments and appropriate references - what motivates learning?" This is a false dilemma. In the past, students could always pay another person to write their essays, and some did. The best AI is no different, except in the scale of the problem. Just as we *should* ban calculators in elementary school, because we want students to master the skills of basic arithmetic, we *should* ban AI at the initial stages of learning how to write (ideally in high school, realistically in the early years of college/uni).

More broadly, I agree that oral examinations are an increasingly crucial complement to any at-home or technology-based written assignment. To these I add more and more in-class, pen-and-paper-only essays. The combination of seeing students' definitely genuine writing (pen and paper, under observation) AND orally examining them on their at-home research seems to me the best solution. Ultimately, yes, they will integrate AI into their work, but to ensure they are the ones in the intellectual "driver's seat", we must be sure that they master the skills first, and temporary bans at the early stages of learning are useful and probably necessary.

Protesting calculator use seems pretty reasonable, actually. It's good to have a foundation in doing arithmetic yourself before you have the calculator do it. Doing arithmetic for yourself helps build useful mathematical intuition.

My favorite math teacher in high school had 3 sections on every test: one brain-only section (no calculators or scratch paper), one section where scratch paper was allowed but no calculator, and finally a section where the TI-83 calculator was allowed. This was for relatively high-level courses covering topics like trigonometry and pre-calculus.

Imagine working at a job where your boss has zero hands-on experience in your line of work. You will quickly find yourself resenting your boss. Your boss will accidentally demand the impossible, or make claims that are "not even wrong", and you'll have to humor them.

The best boss has hands-on experience, which gives them deep knowledge of the challenges their workers face. To become that boss, you put in the hours to gain hands-on experience. The same principle applies whether you're managing a human or an AI. That's my take.